let

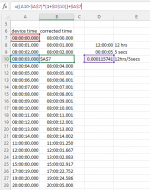

Source = #"AP-read",

#"Removed Top Rows" = Table.Skip(Source,4),

#"Promoted Headers" = Table.PromoteHeaders(#"Removed Top Rows", [PromoteAllScalars=true]),

#"Removed Other Columns" = Table.SelectColumns(#"Promoted Headers",{"Speed (km/hr)", "Wind Speed (km/hr)", "Timestamp", "Air Dens (kg/m^3)"}),

#"Renamed Columns" = Table.RenameColumns(#"Removed Other Columns",{{"Speed (km/hr)", "AP.speed"}, {"Wind Speed (km/hr)", "AP.wind_speed"}, {"Timestamp", "AP.timestamp"}, {"Air Dens (kg/m^3)", "AP.air_dens"}}),

// Strip the "Z" (UTC designator) from the timestamp text before converting it to datetime.
#"Replaced Value" = Table.ReplaceValue(#"Renamed Columns","Z","",Replacer.ReplaceText,{"AP.timestamp"}),

#"Changed Type" = Table.TransformColumnTypes(#"Replaced Value",{{"AP.speed", type number}, {"AP.wind_speed", type number}, {"AP.timestamp", type datetime}, {"AP.air_dens", type number}}),

#"speed km/hr->m/s" = Table.TransformColumns(#"Changed Type", {{"AP.speed", each _ / 3.6, type number}}),

#"wind_speed km/hr->m/s" = Table.TransformColumns(#"speed km/hr->m/s", {{"AP.wind_speed", each _ / 3.6, type number}}),

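// Converting datetime to number yields a serial day count (days since 1899-12-30), matching Excel's date serials.
#"timestamp ->XL" = Table.TransformColumnTypes(#"wind_speed km/hr->m/s",{{"AP.timestamp", type number}}),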

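// 32873 is the serial day number of 1989-12-31, the Garmin (FIT) timestamp epoch; 86400 is the number of seconds per day.
#"timestamp XL->Garmin" = Table.TransformColumns(#"timestamp ->XL", {{"AP.timestamp", each (_ - 32873)*86400, type number}}),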
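
// Worked check of the conversion above (a sketch, assuming AP.timestamp holds a serial day number at this point):
// 1990-01-01 00:00:00 has serial 32874, so (32874 - 32873) * 86400 = 86400 seconds,
// i.e. exactly one day after 1989-12-31 00:00:00.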

#"Changed Type2" = Table.TransformColumnTypes(#"timestamp XL->Garmin",{{"AP.timestamp", Int64.Type}})

in

#"Changed Type2"